Open-Sourcing FASHN VTON v1.5: Maskless Virtual Try-On in Pixel Space

We're open-sourcing FASHN VTON v1.5, a segmentation-free virtual try-on model that generates photorealistic results directly in pixel space.

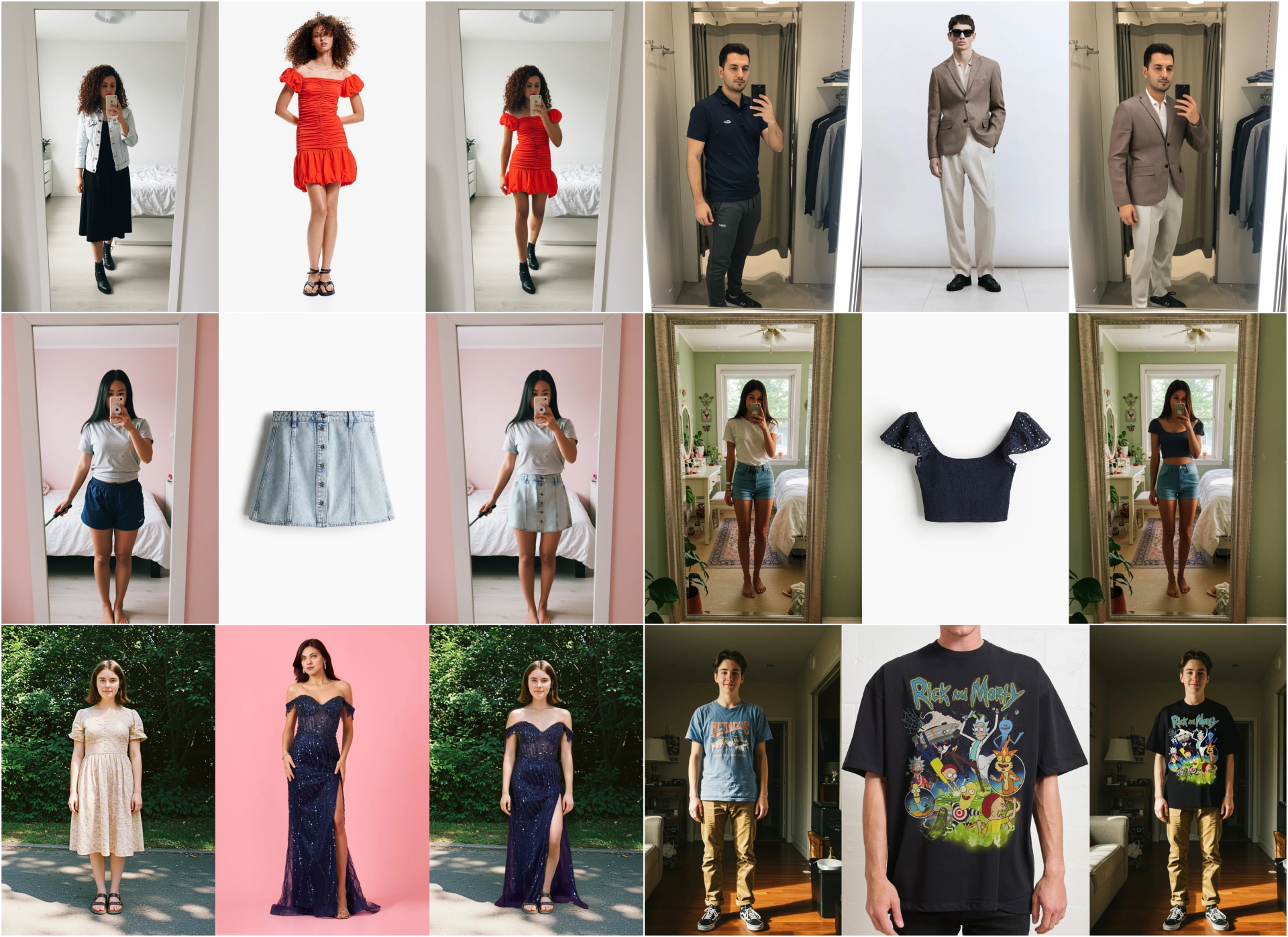

Today we're open-sourcing FASHN VTON v1.5, our virtual try-on model. Given an image of a person and an image of a garment, it generates a photorealistic image of that person wearing the garment.

This Apache-2.0 licensed release is for researchers, developers, and product teams who want to build on a production-proven virtual try-on baseline.

Virtual try-on results from FASHN VTON v1.5. Left columns show person and garment inputs; right columns show generated outputs. The model works with both model-worn garments and flat-lay product shots.

What We're Releasing

We set out to open-source our proprietary virtual try-on system, and we're doing it piece by piece. The first component was the FASHN Human Parser, a segmentation model that identifies body parts and clothing regions. It serves as the foundation for virtual try-on, and today's release builds on it.

Today, we're releasing the core of our virtual try-on system:

- Model weights on Hugging Face
- Inference code on GitHub
- Project page with architecture and method details

Next up: an interactive demo where you can try virtual try-on directly in your browser, no code required.

And further down the road, we'll publish a technical paper covering the research, training process, and lessons learned from building production-ready virtual try-on pipelines.

What Makes FASHN VTON v1.5 Different?

Two architectural choices distinguish this model from most virtual try-on approaches:

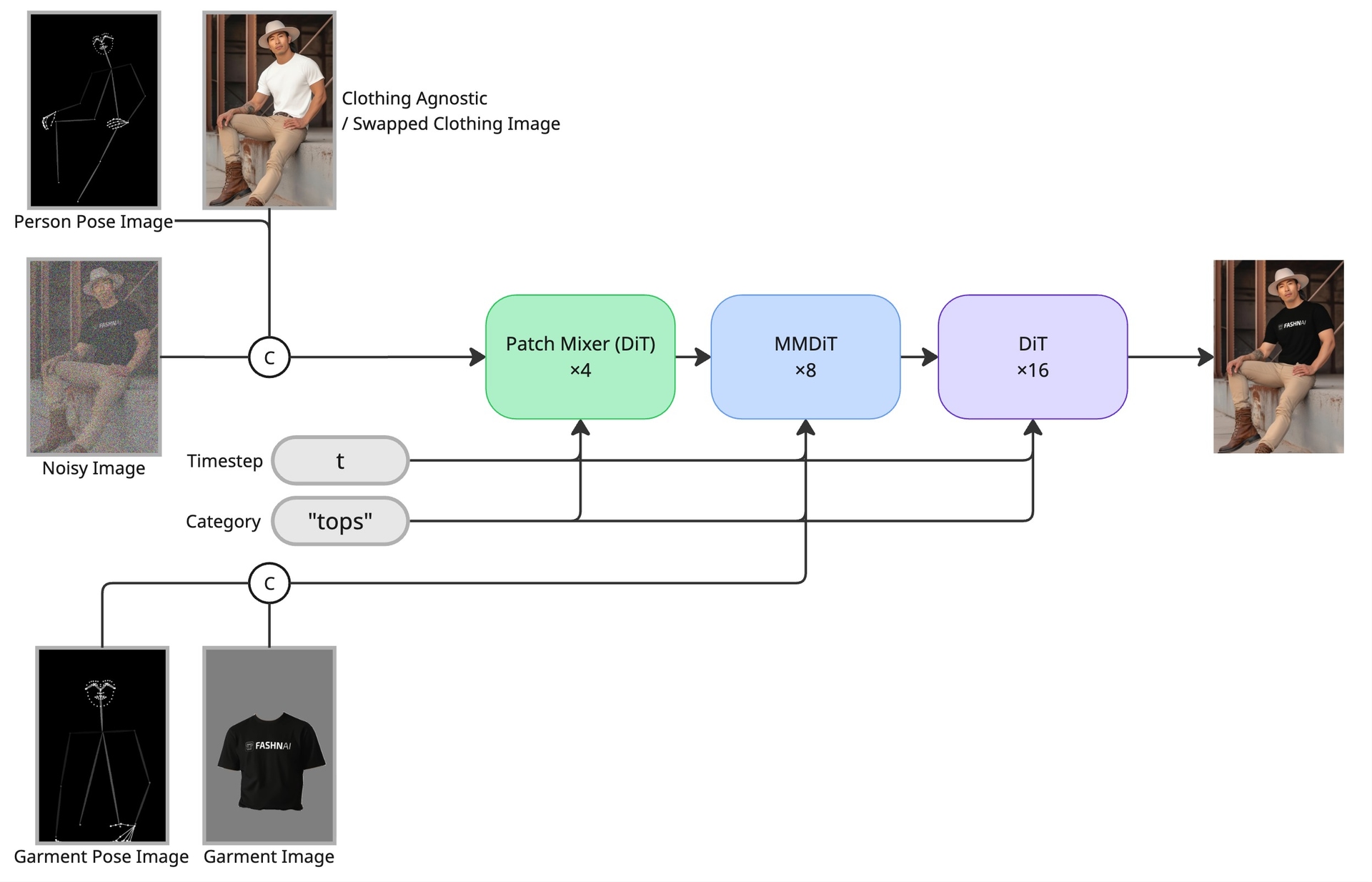

Pixel-Space Generation

Most diffusion-based image generation happens in latent space: the image is first encoded by a VAE, processed in a compressed representation, then decoded back to pixels. FASHN VTON v1.5 operates directly on RGB pixels. This eliminates the information loss that comes with VAE encoding and results in sharper, more accurate outputs, particularly for fine garment details like textures and patterns.

Pixel-space generation allows the front-facing graphic to warp naturally to the model's three-quarter pose while preserving every detail.
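To make the information-loss argument concrete, here is a rough back-of-the-envelope sketch of how much a latent-space pipeline compresses an image before the diffusion model ever sees it. The 8x spatial downsampling and 4 latent channels are assumptions typical of Stable Diffusion-style VAEs, not figures from FASHN VTON v1.5, and the 1024x768 resolution is only an example.

```python
def latent_compression_ratio(height, width, channels=3,
                             downsample=8, latent_channels=4):
    """Return how many pixel values map onto each latent value."""
    pixel_values = height * width * channels
    latent_values = (height // downsample) * (width // downsample) * latent_channels
    return pixel_values / latent_values


def pixels_per_latent_cell(downsample=8):
    """Number of RGB pixels summarized by one spatial latent position."""
    return downsample * downsample


# Hypothetical 1024x768 try-on image; the resolution is an example only.
ratio = latent_compression_ratio(1024, 768)
print(f"pixel values per latent value: {ratio:.0f}")
print(f"pixels per latent position: {pixels_per_latent_cell()}")
```

Under these assumptions, each latent position has to summarize an 8x8 patch of pixels, which is where fine textures, small text, and thin patterns tend to get lost. A pixel-space model skips this bottleneck entirely.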

Maskless Inference

Traditional virtual try-on requires a segmentation mask to define where the garment should appear on the person. This creates two problems: the mask can be imprecise, and the output is constrained to the mask boundaries. A loose sweater, for example, can't extend beyond the silhouette of the original tight shirt.

FASHN VTON v1.5 generates in segmentation-free mode by default. Without a mask, the model learns to preserve body regions that shouldn't change (face, hands, unaffected clothing) while allowing the garment to take its natural form. The result is better body preservation and unconstrained garment volume. This is particularly useful for long, loose, or bulky garments and challenging occlusions like hair or accessories.

Before: masking biases the output toward the original clothing shape. After: segmentation-free inference lets the garment take its natural form.
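The mask constraint can be illustrated with a toy example. The sketch below is not FASHN's code or any specific method; it assumes a generic inpainting-style setup where generated pixels are kept only inside the mask, and it uses a single row of labeled "pixels" in place of a real image.

```python
def composite_with_mask(person, generated, mask):
    """Keep generated pixels only where mask is 1; elsewhere keep the person image."""
    return [g if m else p for p, g, m in zip(person, generated, mask)]


# One row of a toy image: a tight shirt covers the two center pixels.
person = ["bg", "bg", "shirt", "shirt", "bg", "bg"]
mask = [0, 0, 1, 1, 0, 0]  # mask derived from the original tight shirt

# A loose sweater naturally wants to cover four pixels, not two.
generated = ["bg", "sweater", "sweater", "sweater", "sweater", "bg"]

masked_output = composite_with_mask(person, generated, mask)
maskless_output = generated  # no mask, so the garment keeps its full extent

print("masked:  ", masked_output)
print("maskless:", maskless_output)
```

In the masked result the sweater is clipped to the silhouette of the original shirt, while the maskless result keeps the wider garment. The trade-off is that a maskless model has to learn on its own which regions to leave untouched.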

Why Open-Source?

The AI industry is consolidating around massive generalist models. State-of-the-art solutions, including virtual try-on, are increasingly built into trillion-parameter systems that demand enterprise-scale resources. FASHN VTON v1.5 represents a different approach.

At 972M parameters, it runs on consumer GPUs. It needs no prompts, just images. It can be trained from scratch for $5,000 to $10,000. And it operates in pixel space, a rarity in open-source diffusion models. Despite this focus on efficiency, many users still consider it state-of-the-art for virtual try-on.
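As a rough sanity check on the consumer-GPU claim, the sketch below estimates the memory needed for the weights alone. The precision used by the released checkpoint is not stated here, so the dtypes are assumptions, and the estimate excludes activations and any auxiliary models.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}


def weight_memory_gb(num_params, dtype="float16"):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


NUM_PARAMS = 972_000_000  # parameter count quoted in this post

for dtype in ("float32", "float16"):
    print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, dtype):.3f} GB")
```

That works out to just under 2 GB at 16-bit precision and just under 4 GB at 32-bit. Actual peak usage during inference will be higher once activations are included.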

We're releasing it because independent researchers, fashion tech teams, and developers should have access to production-grade models they can study, extend, and deploy themselves. Our upcoming technical paper will share what we've learned about training and deploying specialized architectures like this one.

We hope this release helps demonstrate that focused, efficient models remain a viable path. The fashion tech ecosystem deserves more than API access to black boxes; it deserves models it can own, understand, and improve.

Project Page

To learn more about how FASHN VTON v1.5 works, including architecture details, method overview, and additional visual results, visit the project page.

FASHN VTON v1.5 architecture overview. See the project page for full details.

Quick Start

Clone the repository and install dependencies:

    git clone https://github.com/fashn-AI/fashn-vton-1.5.git
    cd fashn-vton-1.5
    pip install -e .
    # Download the model weights into ./weights
    python scripts/download_weights.py --weights-dir ./weights

Then run your first try-on:

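    from PIL import Image
    from fashn_vton import TryOnPipeline

    pipeline = TryOnPipeline(weights_dir="./weights")

    # Placeholder paths: point these at your own images (PIL input is assumed).
    person_image = Image.open("person.jpg").convert("RGB")
    garment_image = Image.open("garment.jpg").convert("RGB")

    result = pipeline(person_image, garment_image, category="tops")
    result.images[0].save("output.png")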

See the GitHub repository for full documentation, weight downloads, and configuration options.

What's Next

An interactive demo and a research paper are coming in the next few days. Star or watch the repository to get notified when they're available.

We'd love to see what you build. Join our Discord to share results, ask questions, and discuss the research, or tag us on X. Feedback and contributions are welcome on GitHub.