Open-Sourcing FASHN Human Parser: Production-Grade Semantic Segmentation for Fashion

We're releasing a state-of-the-art human parsing model to the open-source community: a SegFormer-B4 fine-tuned for fashion applications, available via PyPI, HuggingFace, and GitHub. This is the first step in open-sourcing our virtual try-on stack.

Today we're open-sourcing FASHN Human Parser, a state-of-the-art semantic segmentation model built specifically for fashion and virtual try-on applications.

This release marks Phase 1 of our open-source initiative. The human parser is a critical foundation that our upcoming virtual try-on model will depend on. By releasing it first, we're giving developers a head start on integration while gathering community feedback.

What is Human Parsing?

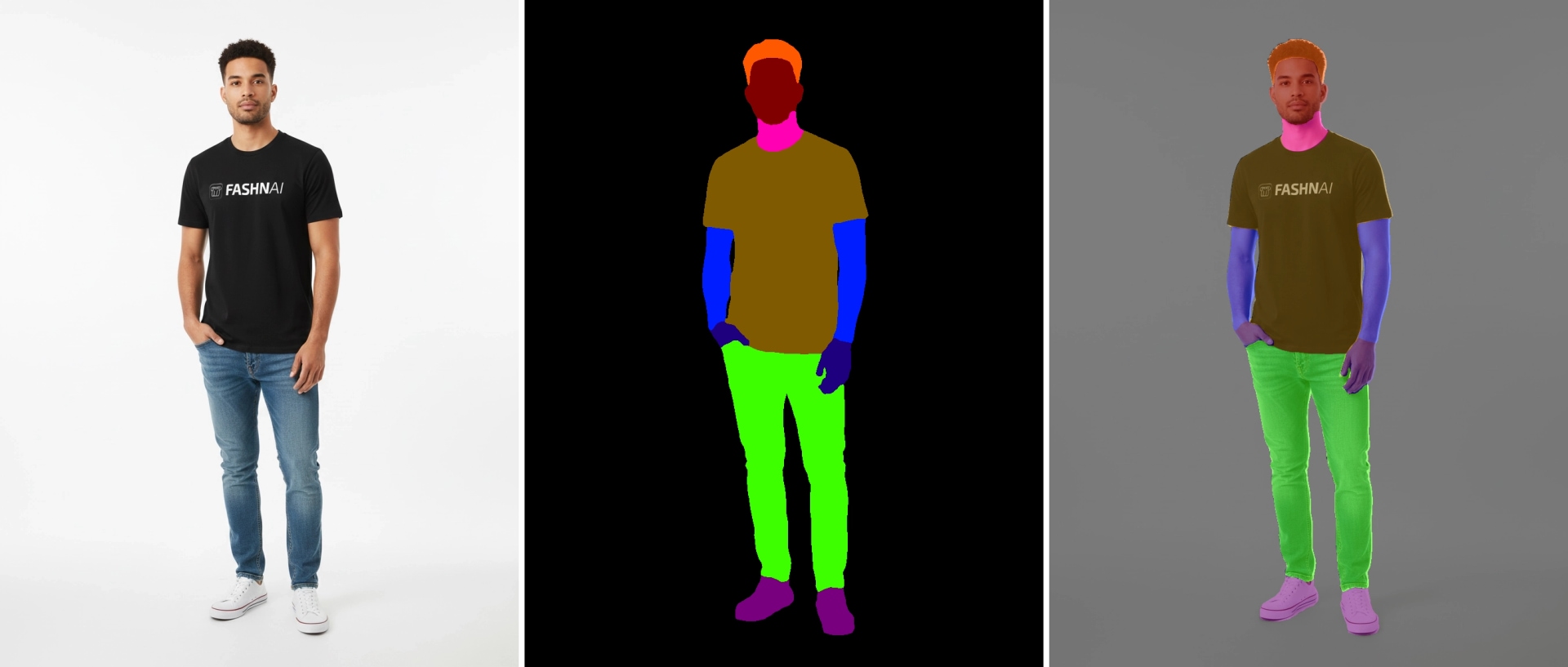

Human parsing is semantic segmentation for people. It divides a person in an image into meaningful regions: body parts like face, hair, arms, and hands; clothing items like tops, pants, and dresses; and accessories like bags, hats, and jewelry.

The model outputs a pixel-wise segmentation map with 18 semantic classes, visualized here as a colored mask and overlay.
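To make the "colored mask and overlay" idea concrete, here is a minimal sketch of turning a map of class IDs into an RGB mask and alpha-blending it onto the photo. It assumes a label map stored as a list of rows of integer class IDs and an image stored as rows of RGB tuples. The `PALETTE` colors are hypothetical (they are not the demo's actual colors), and `colorize` and `overlay` are illustrative helpers, not part of the package.

```python
# Hypothetical palette: one RGB color per class ID. Only a few classes are
# listed here; any ID without an entry falls back to gray.
PALETTE = {
    0: (0, 0, 0),        # background
    1: (255, 200, 150),  # face
    2: (120, 60, 20),    # hair
    3: (220, 20, 60),    # top
    6: (30, 90, 200),    # pants
}
FALLBACK = (128, 128, 128)


def colorize(label_map):
    """Map every class ID in a 2D label map to an RGB tuple."""
    return [[PALETTE.get(class_id, FALLBACK) for class_id in row] for row in label_map]


def overlay(image, color_mask, alpha=0.5):
    """Alpha-blend a color mask onto an RGB image of the same size.

    alpha=0 returns the original image; alpha=1 returns the mask colors.
    """
    blended = []
    for img_row, mask_row in zip(image, color_mask):
        blended.append([
            tuple(round((1 - alpha) * p + alpha * m) for p, m in zip(pixel, mask_color))
            for pixel, mask_color in zip(img_row, mask_row)
        ])
    return blended


labels = [[0, 2], [1, 3]]
image = [[(100, 100, 100)] * 2 for _ in range(2)]
print(overlay(image, colorize(labels))[1][1])  # (160, 60, 80)
```

With NumPy the same colorization is a single fancy-indexing step, `palette[label_map]`, where `palette` is an `(18, 3)` array and `label_map` is an `(H, W)` integer array; the pure-Python version just keeps the sketch dependency-free.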

For virtual try-on, this semantic map is essential. To digitally dress someone in new clothing, you need to know exactly where their current clothing is, where their body parts are, and what should be preserved (face, hair, accessories). Human parsing provides this information at the pixel level.

For a deeper look at the datasets commonly used to train human parsing models and their quality challenges, see our technical deep-dive on fashion segmentation datasets.

What We're Releasing

1. The Model

A SegFormer-B4 architecture fine-tuned on a carefully curated dataset, optimized specifically for fashion and virtual try-on applications. We've previously written about the quality issues in common public datasets like ATR, LIP, and iMaterialist. This model was trained to address those shortcomings.

| Specification | Value |
|---|---|
| Architecture | SegFormer-B4 (MIT-B4 encoder + MLP decoder) |
| Classes | 18 semantic categories |
| Input Size | 384 x 576 pixels |
| Model Size | ~244 MB |
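Because the model runs at a fixed input size, a label map produced at that resolution has to be mapped back to the original image size. Class IDs are categorical, so interpolation that averages neighboring values would invent meaningless IDs; nearest-neighbor sampling is the usual safe choice for label maps. The sketch below is a hypothetical helper, assuming a label map stored as a list of rows. It is not necessarily how the package restores the output size internally (it might, for example, upsample logits before taking the argmax).

```python
def resize_nearest(label_map, out_h, out_w):
    """Resize a 2D label map with nearest-neighbor sampling.

    Each output pixel copies the class ID of the input pixel that contains
    the output pixel's center, so no new class IDs are ever created.
    """
    in_h, in_w = len(label_map), len(label_map[0])
    out = []
    for y in range(out_h):
        # Map the output pixel's center back into input row coordinates.
        src_y = min(int((y + 0.5) * in_h / out_h), in_h - 1)
        row = label_map[src_y]
        out.append([
            row[min(int((x + 0.5) * in_w / out_w), in_w - 1)]
            for x in range(out_w)
        ])
    return out


print(resize_nearest([[1, 2], [3, 4]], 4, 4))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```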

HuggingFace: fashn-ai/fashn-human-parser

2. Python Package

A production-ready package that implements the exact preprocessing used during training for maximum accuracy.

```bash
pip install fashn-human-parser
```

PyPI: fashn-human-parser

3. Interactive Demo

A Gradio-based web demo where anyone can try the model without writing code.

Try it: HuggingFace Space

4. Full Source Code

Complete source code with documentation on GitHub.

GitHub: fashn-AI/fashn-human-parser

Getting Started

We've made the model easy to use with two integration paths. Choose based on your needs:

HuggingFace Pipeline

The fastest way to get started. Two lines of code, no additional dependencies beyond transformers.

```python
from transformers import pipeline

pipe = pipeline("image-segmentation", model="fashn-ai/fashn-human-parser")
result = pipe("image.jpg")
```

The pipeline returns a list of dictionaries with `label`, `score`, and `mask` keys for each detected class.
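If you use the pipeline but want a single label map like the one the package returns, you can merge the per-class masks yourself. This is a minimal sketch under two assumptions: the pipeline's `label` strings match the names in the 18-class table in this post, and each `mask` has already been converted to a nested list of 0/255 values (for example with `numpy.array(mask).tolist()`). `merge_masks` and `LABEL_TO_ID` are hypothetical names, not part of either library.

```python
# Label names from the 18-class table; assumed to match the model's labels.
LABELS = [
    "background", "face", "hair", "top", "dress", "skirt", "pants", "belt",
    "bag", "hat", "scarf", "glasses", "arms", "hands", "legs", "feet",
    "torso", "jewelry",
]
LABEL_TO_ID = {name: i for i, name in enumerate(LABELS)}


def merge_masks(results, height, width):
    """Combine per-class binary masks into one height x width label map.

    `results` is a list of dicts with "label" and "mask" keys, where each
    mask is a 2D nested list of 0/255 values. Pixels not covered by any
    mask stay 0 (background). If masks overlap, later entries win.
    """
    label_map = [[0] * width for _ in range(height)]
    for item in results:
        class_id = LABEL_TO_ID[item["label"]]
        for y, row in enumerate(item["mask"]):
            for x, value in enumerate(row):
                if value:  # any non-zero value marks the pixel as this class
                    label_map[y][x] = class_id
    return label_map


results = [
    {"label": "hair", "mask": [[255, 0], [0, 0]]},
    {"label": "top", "mask": [[0, 0], [255, 255]]},
]
print(merge_masks(results, 2, 2))  # [[2, 0], [3, 3]]
```

With real pipeline output, the masks are PIL images, so the width and height can be read from `result[0]["mask"].size`, which PIL returns as `(width, height)`.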

PyPI Package (Recommended)

For production use, we recommend the dedicated Python package. It provides exact preprocessing, cleaner output, and useful utilities.

```bash
pip install fashn-human-parser
```

```python
from fashn_human_parser import FashnHumanParser

parser = FashnHumanParser()
segmentation = parser.predict("photo.jpg")
```

Why use the package?

- Exact preprocessing: Uses `cv2.INTER_AREA` resize, matching the training pipeline exactly. The HuggingFace version uses PIL `LANCZOS` for broader compatibility, which may produce slightly different results.
- Cleaner output: Returns a single numpy array of shape `(H, W)` with class IDs 0-17, already resized to match your input dimensions.
- Batch processing: Process multiple images in a single forward pass with `parser.predict([img1, img2, img3])`.
- Label utilities: Includes `IDS_TO_LABELS`, `LABELS_TO_IDS`, and `IDENTITY_LABELS` mappings for downstream tasks.
- Flexible input: Accepts file paths, PIL Images, or numpy arrays.
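As an example of a downstream task on the `(H, W)` class-ID output, the sketch below reports what fraction of the image each detected class covers. It uses a local `ID_TO_LABEL` dictionary built from the 18-class table as a stand-in for the package's `IDS_TO_LABELS`, whose exact structure is not assumed here; `class_coverage` is a hypothetical helper. A numpy array from `parser.predict` can be passed directly or converted first with `.tolist()`.

```python
from collections import Counter

# Local stand-in for the package's IDS_TO_LABELS, built from the class table.
ID_TO_LABEL = dict(enumerate([
    "background", "face", "hair", "top", "dress", "skirt", "pants", "belt",
    "bag", "hat", "scarf", "glasses", "arms", "hands", "legs", "feet",
    "torso", "jewelry",
]))


def class_coverage(label_map):
    """Return {label: fraction of pixels} for every class present in the map,
    ordered from most to least common."""
    counts = Counter(class_id for row in label_map for class_id in row)
    total = sum(counts.values())
    return {ID_TO_LABEL[int(class_id)]: n / total for class_id, n in counts.most_common()}


print(class_coverage([[0, 0], [3, 6]]))
```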

The 18 Semantic Classes

Our label schema was developed specifically for virtual try-on applications, capturing the semantic regions most relevant for clothing transfer.

Color-coded legend for all 18 semantic classes.

| ID | Label | Category |
|---|---|---|
| 0 | background | - |
| 1 | face | Body (preserved in try-on) |
| 2 | hair | Body (preserved in try-on) |
| 3 | top | Clothing (upper) |
| 4 | dress | Clothing (full) |
| 5 | skirt | Clothing (lower) |
| 6 | pants | Clothing (lower) |
| 7 | belt | Clothing (lower) |
| 8 | bag | Accessory (preserved) |
| 9 | hat | Accessory (preserved) |
| 10 | scarf | Clothing (upper) |
| 11 | glasses | Accessory (preserved) |
| 12 | arms | Body |
| 13 | hands | Body |
| 14 | legs | Body |
| 15 | feet | Body |
| 16 | torso | Body |
| 17 | jewelry | Accessory (preserved) |

The "preserved" labels indicate regions that are typically kept intact during virtual try-on. The model learns to distinguish between clothing that should be swapped and elements that should remain unchanged.
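The Category column translates directly into masks: the "preserved" IDs form a keep-as-is region, and the clothing IDs form the region a try-on system would consider replacing. The sketch below is illustrative only. The ID groupings come from the table, but `region_masks` is a hypothetical helper, and this post does not describe how the upcoming try-on model actually consumes these regions.

```python
# ID groups taken from the "Category" column of the class table.
PRESERVED_IDS = frozenset({1, 2, 8, 9, 11, 17})  # face, hair, bag, hat, glasses, jewelry
CLOTHING_IDS = frozenset({3, 4, 5, 6, 7, 10})    # top, dress, skirt, pants, belt, scarf


def region_masks(label_map, clothing_ids=CLOTHING_IDS):
    """Split a label map into boolean 'preserve' and 'clothing' masks.

    Pass a narrower `clothing_ids` set (e.g. {3, 10} for upper-body items
    only) to target a single garment category.
    """
    preserve = [[class_id in PRESERVED_IDS for class_id in row] for row in label_map]
    clothing = [[class_id in clothing_ids for class_id in row] for row in label_map]
    return preserve, clothing


preserve, clothing = region_masks([[1, 3], [12, 0]])
print(preserve)  # [[True, False], [False, False]]
print(clothing)  # [[False, True], [False, False]]
```

Body classes such as arms and torso fall in neither mask, since the table does not mark them as preserved or as clothing.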

More Examples

The model handles various poses and clothing styles.

Precise boundary detection for complex garments.

Why We're Open-Sourcing This

Virtual try-on technology has enormous potential to transform e-commerce, reduce returns, and make online shopping more accessible. But building these systems from scratch requires significant resources.

By open-sourcing the human parser, we're:

- Lowering the barrier to entry for researchers and developers exploring virtual try-on

- Contributing back to the open-source community that powers much of our work

- Building toward something bigger: the parser is a prerequisite for the try-on model we're releasing next

What's Coming Next

This release is Phase 1 of our open-source initiative.

Phase 2 will be the main event: open-sourcing FASHN VTON Core, our virtual try-on pipeline. The human parser you're seeing today is a critical dependency that the try-on model will build upon.

Because we're releasing the parser first, you can start integrating it into your workflows now and be ready when the full try-on model drops.

Get Started Today

- Try it instantly: HuggingFace Space (no code required)
- Quick prototyping: HuggingFace Model (2 lines of code)
- Production use: PyPI Package (`pip install fashn-human-parser`)
- Full control: GitHub Repository (complete source code)

We'd love to hear what you build with it. Join our Discord community to share your projects, ask questions, and stay updated on Phase 2.